A bulk trigger lets you process multiple record changes at once when they're bundled together (for example, during a CSV import or grid edit). Instead of handling each record with a separate pipeline, all the changes are processed together in one pipeline as an array.

A bulk trigger can also handle individual record changes (for example, interactive updates or isolated events). In these cases, the array of data received only contains one item.

Bulk triggers can process data in parallel. If a source system sends data in multiple batches, each batch will trigger its own pipeline to process the array of data received.
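The batch behavior can be pictured with a small local simulation. This is only a sketch: `run_pipeline` and the batch contents are hypothetical stand-ins, and a thread pool is used purely to illustrate independent runs, not to describe how Quickbase schedules pipelines.

```python
from concurrent.futures import ThreadPoolExecutor

def run_pipeline(batch):
    """Hypothetical stand-in for one pipeline run; it receives one array of changes."""
    return len(batch)

# Assumed example: the source system sent two batches of record changes.
batches = [
    [{"record_id": 1}, {"record_id": 2}],
    [{"record_id": 3}],
]

# Each batch gets its own run; the runs do not depend on each other.
with ThreadPoolExecutor() as pool:
    counts = list(pool.map(run_pipeline, batches))

print(counts)
```

Each run sees only the array from its own batch, so any per-run summary (such as a count of changes) applies to that batch alone.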

Use cases

Bulk triggers are useful when there is a need to handle multiple record changes, regardless of the amount of data changed, as a single action. Some use cases include:

- Prerequisites validation

- Dependencies provisioning (ensuring a parent-child relationship exists)

- Change process coordination (triggering one notification or task per change operation)

Processing an array in a single pipeline improves efficiency, reduces the number of requests made to external systems, and minimizes transient error scenarios caused by processing records one at a time.

Add a bulk trigger step

Add a bulk trigger step from the Quickbase channel:

- Create a pipeline

- Select the Quickbase channel

- Select the On New Bulk Event trigger

- Configure when the step should trigger

The On New Bulk Event trigger reacts to one or more record changes in a Quickbase table. It receives a single webhook containing all records that were created, edited, or deleted. You can configure the step to trigger on any combination of record changes (for example, record added and modified, or record added, modified, and deleted). As part of the output of the pipeline, you'll see the total number of record changes included with the webhook.

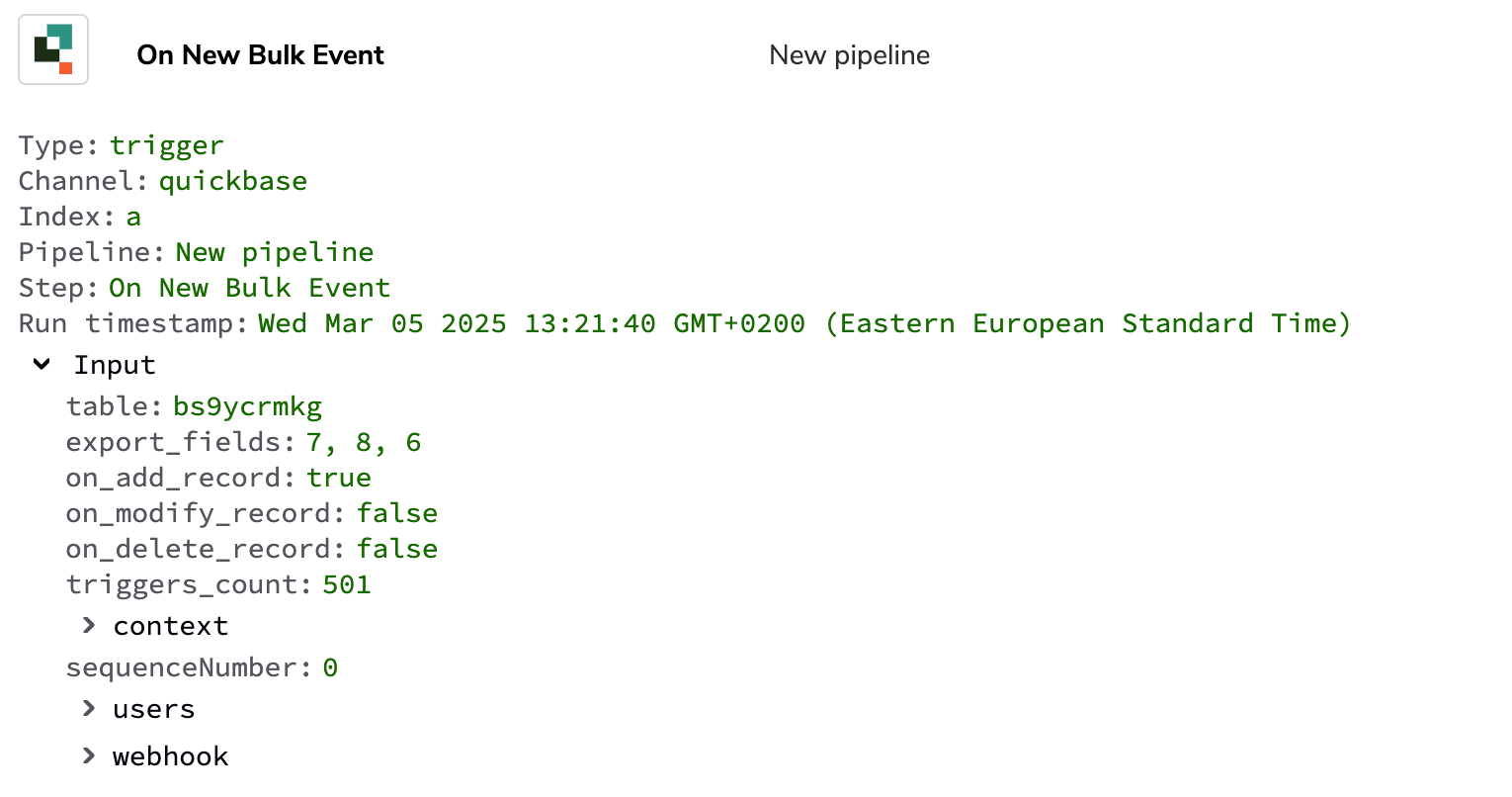

Here’s an example of what activity for the On New Bulk Event trigger could look like:

Process bulk data in a pipeline

The output of the On New Bulk Event trigger step is an array of data. To process the data, you can use a loop to iterate through each record and perform an appropriate action. Learn how to use a loop in the bulk trigger step.
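The idea of looping over the array can be sketched outside of Pipelines as follows. The payload shape here (`event`, `record_id`, and `fields` keys) is an assumption made for illustration, not the documented output of the trigger, and `process_bulk` is a hypothetical helper.

```python
from collections import defaultdict

# Hypothetical payload shape; the real trigger output may differ.
sample_events = [
    {"event": "added", "record_id": 1, "fields": {"Name": "Alpha"}},
    {"event": "modified", "record_id": 2, "fields": {"Name": "Beta"}},
    {"event": "deleted", "record_id": 3, "fields": {}},
]

def process_bulk(events):
    """Iterate once over the array and group record IDs by change type."""
    grouped = defaultdict(list)
    for item in events:
        grouped[item["event"]].append(item["record_id"])
    return dict(grouped)

summary = process_bulk(sample_events)
print(summary)
```

The same loop handles an individual record change without special casing, because in that case the array simply contains one item.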

All events are packaged in one activity log for the run of the pipeline, making it easier to locate related activity and debug issues if needed.

Filter limits

Supported filters are: equals, does not equal, is, is not, contains, does not contain, starts with, does not start with, is greater than, is less than, is before, is after, is set, is not set, is empty, is not empty.

Fields you cannot include in filters:

- Duration and Multiline text fields

- Nested fields. However, user.email is supported when you use these conditions: equals, does not equal, is set, is not set
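The limits above can be encoded as a small pre-check, for example to review a planned filter before configuring it. This is a sketch under stated assumptions: `check_filter` is a hypothetical helper, the field-type labels are taken from this article, and a dot in the field name is assumed to mark a nested field.

```python
SUPPORTED_CONDITIONS = {
    "equals", "does not equal", "is", "is not", "contains", "does not contain",
    "starts with", "does not start with", "is greater than", "is less than",
    "is before", "is after", "is set", "is not set", "is empty", "is not empty",
}
UNSUPPORTED_FIELD_TYPES = {"duration", "multiline text"}
# The only nested field listed as supported, with its allowed conditions.
NESTED_EXCEPTIONS = {
    "user.email": {"equals", "does not equal", "is set", "is not set"},
}

def check_filter(field_name, field_type, condition):
    """Return a list of problems; an empty list means no documented limit is hit."""
    problems = []
    if condition not in SUPPORTED_CONDITIONS:
        problems.append(f"unsupported condition: {condition}")
    if field_type.lower() in UNSUPPORTED_FIELD_TYPES:
        problems.append(f"unsupported field type: {field_type}")
    if "." in field_name:  # assumption: a dot marks a nested field
        allowed = NESTED_EXCEPTIONS.get(field_name)
        if allowed is None:
            problems.append(f"nested field not supported: {field_name}")
        elif condition not in allowed:
            problems.append(f"{field_name} does not support: {condition}")
    return problems

print(check_filter("user.email", "Email", "contains"))
```

Returning a list rather than raising on the first problem lets a single call report every limit that a filter violates.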